Complete Guide to Snowflake Architecture: Layers, Data Flow, Security Features, Benefits, and Drawbacks

Snowflake has reshaped cloud data warehousing by offering flexible, scalable, high-performance analytics as a managed service. This guide breaks down the essential components of Snowflake’s architecture, explains how data flows through it, describes its security features, and weighs its key benefits against its potential challenges. Whether you’re a data engineer, architect, or business stakeholder, it aims to give you a clear understanding of how to use Snowflake effectively.

What is Snowflake Architecture?

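Snowflake is a data platform built natively for public cloud infrastructure. Its architecture separates storage, compute, and cloud services into three layers that work together but scale independently. Snowflake describes this design as a hybrid of shared-disk and shared-nothing architectures: all compute nodes read from one central data repository, while queries run on MPP compute clusters in which each node keeps a portion of the data locally. Decoupling the layers supports elastic scaling, concurrent workloads, and simpler data management. It also avoids a common limitation of traditional on-premises warehouses, where storage and compute capacity were often expanded together.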

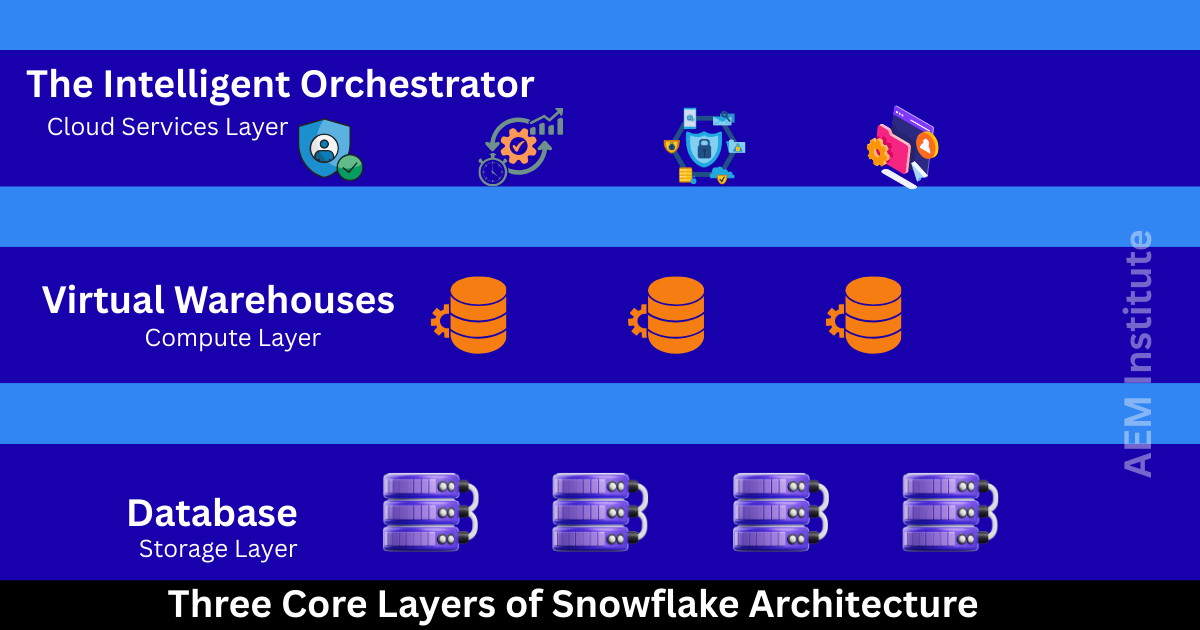

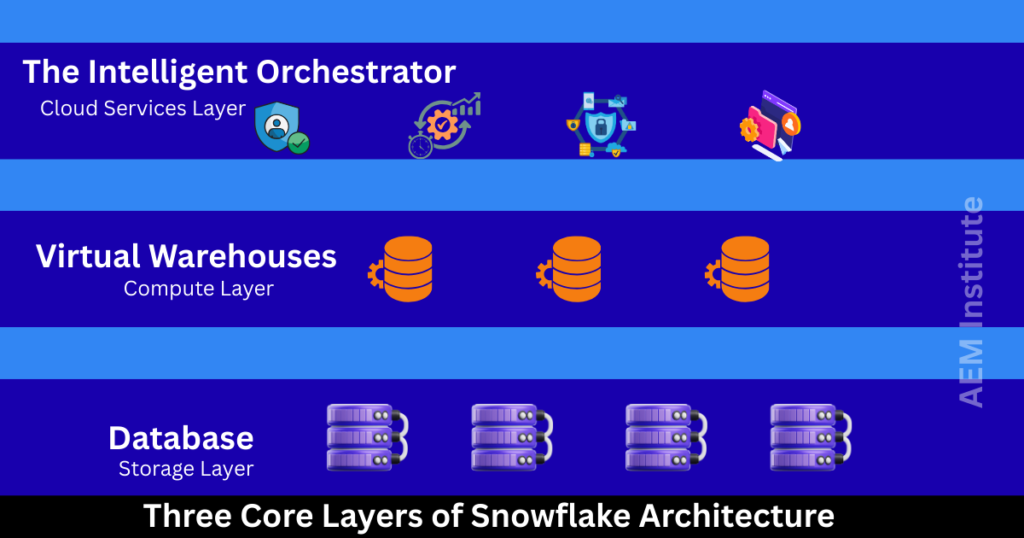

The Three Core Layers of Snowflake Architecture

1. Storage Layer: The Foundation of Data Persistence

The Storage Layer is Snowflake’s centralized repository for all data.

- Cloud Object Storage: Data resides in the object storage service of the cloud provider hosting the account (Amazon S3, Azure Blob Storage, or Google Cloud Storage), which provides durability and elastic capacity.

- Columnar Storage with Micro-Partitioning: Data is stored in a compressed, columnar format and divided into micro-partitions, each holding roughly 50 to 500 MB of uncompressed data. Snowflake records metadata for every micro-partition, such as the range of values in each column, so a query can skip (prune) partitions that cannot contain matching rows.

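- Automatic Management: Snowflake manages file organization, sizing, compression, metadata, and statistics itself, and the underlying files are not directly visible to users. Data is naturally clustered in load order. For very large tables, you can define a clustering key that Snowflake maintains in the background.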

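- Zero-Copy Cloning: Cloning a database, schema, or table creates a new object that references the source’s existing micro-partitions instead of copying them. Additional storage is consumed only when the clone or the source is modified, which makes clones inexpensive for development and testing.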

- Independence from Compute: Storage is scaled and billed separately from compute, so you can retain large volumes of data without paying for idle compute capacity.
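
Zero-copy cloning is easier to picture with a small model. The Python sketch below is a toy. It assumes a simplified design in which a table is nothing more than a list of references to immutable micro-partitions. The classes `MicroPartition` and `Table` and the helper `distinct_partitions` are hypothetical and are not part of any Snowflake API. The sketch shows the two properties described above: a clone copies references rather than data, and new storage appears only when a write replaces a partition (copy-on-write).

```python
from dataclasses import dataclass, field
from itertools import count

# Toy model only; these classes are hypothetical and not Snowflake internals.
_next_id = count(1)

@dataclass(frozen=True)
class MicroPartition:
    """An immutable block of rows; it is never modified in place."""
    rows: tuple
    pid: int = field(default_factory=lambda: next(_next_id))

@dataclass
class Table:
    name: str
    partitions: list  # references to shared MicroPartition objects

    def clone(self, new_name: str) -> "Table":
        # Zero-copy: the clone gets a new list holding the SAME partition references.
        return Table(new_name, list(self.partitions))

    def update_partition(self, index: int, new_rows: tuple) -> None:
        # Copy-on-write: write a new partition and repoint only this table to it.
        self.partitions[index] = MicroPartition(new_rows)

def distinct_partitions(*tables: Table) -> int:
    """Number of physically stored partitions; shared partitions count once."""
    return len({p.pid for t in tables for p in t.partitions})

orders = Table("orders", [MicroPartition((1, 2)), MicroPartition((3, 4))])
dev = orders.clone("orders_dev")
print(distinct_partitions(orders, dev))  # 2: cloning copied no data
dev.update_partition(0, (1, 99))
print(distinct_partitions(orders, dev))  # 3: only the changed partition is new
print(orders.partitions[0].rows)         # (1, 2): the source is unaffected
```

Because partitions are never modified in place in this model, writes to the clone leave the source untouched, and vice versa.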

2. Compute Layer: Elastic and High-Performance Query Processing

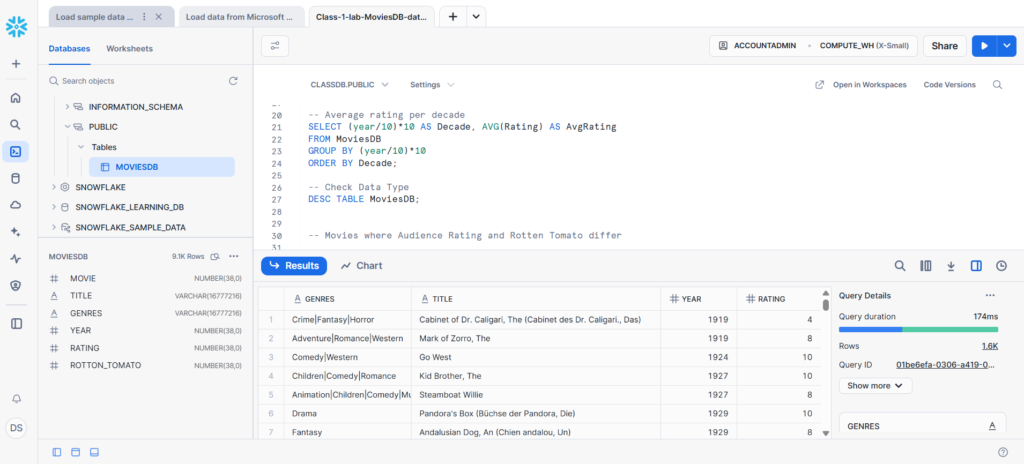

The Compute Layer consists of virtual warehouses: clusters of compute resources that execute queries, data loading, and other DML operations.

- Virtual Warehouses: Each virtual warehouse is an MPP (Massively Parallel Processing) cluster. It processes queries in parallel across its nodes and does not share compute resources with other warehouses.

- Elastic Scaling: A warehouse can be resized (scaled up or down) at any time. Multi-cluster warehouses, an Enterprise Edition feature, can also automatically add or remove clusters (scale out or in) as the number of concurrent queries changes.

- Concurrency Without Contention: Because warehouses do not share compute, separate warehouses for workloads such as ETL and BI keep one from slowing down the other. All of them still read the same data in the storage layer.

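- Query Optimization: Warehouses execute plans that the cloud services layer builds from micro-partition metadata, so each query scans as little data as possible. A running warehouse also caches recently read table data on local disk, which speeds up repeated access until the warehouse is suspended.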

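- Auto-Suspend and Auto-Resume: A warehouse can suspend itself after a configurable idle period (the AUTO_SUSPEND parameter) and resume automatically when a new query arrives (AUTO_RESUME). A suspended warehouse consumes no credits.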
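
You can estimate the cost effect of auto-suspend with simple arithmetic. The sketch below relies on Snowflake’s published billing rules for standard warehouses: per-second billing, a 60-second minimum each time a warehouse resumes, and a credits-per-hour rate that doubles with each size step. It also assumes a simplified suspension model in which the warehouse stops exactly `AUTO_SUSPEND` seconds after its last query finishes. In practice, suspension can lag slightly. The function names are hypothetical.

```python
# Credits per hour for standard warehouses by size, per Snowflake's published
# table; other warehouse types may use different rates. The price of a credit
# depends on edition, cloud, and region, so this sketch stops at credits.
CREDITS_PER_HOUR = {
    "XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8,
    "XLARGE": 16, "XXLARGE": 32, "XXXLARGE": 64, "X4LARGE": 128,
}
MIN_BILLED_SECONDS = 60  # each resume is billed for at least one minute

def billed_seconds(queries, auto_suspend):
    """Billed running time for (start, end) query intervals, in seconds.

    Simplified model: the warehouse resumes when a query arrives while it is
    suspended and suspends exactly `auto_suspend` seconds after the last
    query in the current run finishes.
    """
    if auto_suspend <= 0:
        # In Snowflake, AUTO_SUSPEND = 0 or NULL means "never suspend".
        raise ValueError("this model needs a positive auto_suspend")
    billed = 0
    run_start = run_end = None
    for start, end in sorted(queries):
        if run_start is not None and start <= run_end:
            run_end = max(run_end, end + auto_suspend)  # still running: extend the run
        else:
            if run_start is not None:
                billed += max(run_end - run_start, MIN_BILLED_SECONDS)  # close previous run
            run_start, run_end = start, end + auto_suspend  # resume
    if run_start is not None:
        billed += max(run_end - run_start, MIN_BILLED_SECONDS)
    return billed

def credits(queries, auto_suspend, size="XSMALL"):
    return billed_seconds(queries, auto_suspend) / 3600 * CREDITS_PER_HOUR[size]

queries = [(0, 30), (600, 630), (1200, 1230)]  # three 30-second queries, 10 minutes apart
for suspend in (60, 600):
    print(suspend, billed_seconds(queries, suspend), round(credits(queries, suspend), 4))
# 60 270 0.075
# 600 1830 0.5083
```

With the same three queries and `AUTO_SUSPEND = 10`, each run would be billed as 60 seconds rather than 40 because of the one-minute minimum, for 180 seconds in total. Very short settings also discard the warehouse’s local cache more often, so the cheapest value depends on how your queries are spaced.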

3. Cloud Services Layer: The Intelligent Orchestrator

This layer is a collection of services that coordinates activity across Snowflake, from authentication and metadata management to query optimization and access control.

- Metadata Repository: Stores metadata about databases, schemas, tables, users, and transactions. This includes per-micro-partition statistics that the optimizer uses for planning and pruning.

- Query Parsing and Optimization: Parses SQL statements and compiles them into optimized execution plans, which a virtual warehouse then runs in parallel across its nodes.

- Security and Access Control: Handles user authentication, authorization, auditing, and role-based access management.

- Resource Management and Billing: Manages the underlying infrastructure and tracks credit consumption. Cloud services usage is billed only for the portion that exceeds 10% of the account’s daily warehouse usage.

- Data Sharing and Collaboration: Enables secure, live sharing of data sets between accounts without data duplication.

- Transaction Management: Coordinates ACID-compliant transactions so that concurrent sessions see consistent data while writes are in progress.
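
Every micro-partition carries metadata such as its row count and per-column minimum and maximum. This lets Snowflake answer some queries, such as an unfiltered `COUNT(*)` or `MIN`/`MAX` on a column, from metadata without scanning table data. The Python sketch below illustrates the idea with a hypothetical `PartitionStats` record. It is a simplified model, not Snowflake’s metadata format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PartitionStats:
    """Hypothetical metadata kept for one micro-partition and one numeric column."""
    row_count: int
    min_value: int
    max_value: int

def count_from_metadata(stats):
    # SELECT COUNT(*) FROM t  -> sum of per-partition row counts, no data scanned
    return sum(s.row_count for s in stats)

def min_from_metadata(stats):
    # SELECT MIN(col) FROM t  -> smallest per-partition minimum
    return min(s.min_value for s in stats)

def max_from_metadata(stats):
    # SELECT MAX(col) FROM t  -> largest per-partition maximum
    return max(s.max_value for s in stats)

stats = [PartitionStats(1000, 5, 90), PartitionStats(800, 40, 250), PartitionStats(1200, 1, 60)]
print(count_from_metadata(stats), min_from_metadata(stats), max_from_metadata(stats))
# 3000 1 250
```

A filtered query, by contrast, generally needs a warehouse to scan at least the partitions whose value ranges overlap the filter.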

How Data Flows Through Snowflake Architecture

- Data Ingestion: Data from sources such as databases, cloud platforms, IoT devices, and APIs is loaded in bulk (for example, with COPY INTO from a stage) or continuously (for example, with Snowpipe or Snowpipe Streaming).

- Storage Organization: The loaded data is automatically compressed, divided into micro-partitions, encrypted, and written to cloud object storage.

- Metadata Registration: The cloud services layer records metadata for each new micro-partition, such as row counts and per-column value ranges, for use in query planning.

- Query Request: Each SQL query first reaches the cloud services layer. That layer authenticates the session, checks the active role’s privileges, and compiles an optimized plan.

- Query Execution: A virtual warehouse executes the plan in parallel, reading only the micro-partitions that survive pruning.

- Result Delivery: Query results are sent to users or applications for analysis, reporting, or further processing.

- Real-Time Sharing: Authorized users can securely share live data without duplication via Snowflake’s data sharing capabilities.
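
The pruning step in this flow amounts to a range-overlap test against per-partition metadata. This Python example assumes each micro-partition records only the minimum and maximum of one date column. The partition names and dates are made up. Note that `p4`, which holds late-arriving rows spanning most of the year, survives almost every filter. This is why clustering on frequently filtered columns improves pruning.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class MicroPartition:
    """Hypothetical metadata record: min/max of one column for one micro-partition."""
    name: str
    min_date: date
    max_date: date

def prune(partitions, lo, hi):
    """Keep partitions whose [min_date, max_date] range overlaps the filter [lo, hi]."""
    return [p for p in partitions if p.max_date >= lo and p.min_date <= hi]

PARTITIONS = [
    MicroPartition("p1", date(2024, 1, 1), date(2024, 1, 31)),
    MicroPartition("p2", date(2024, 2, 1), date(2024, 2, 29)),
    MicroPartition("p3", date(2024, 3, 1), date(2024, 3, 31)),
    MicroPartition("p4", date(2024, 1, 15), date(2024, 12, 31)),  # poorly clustered
]

# Equivalent filter: WHERE order_date BETWEEN '2024-02-10' AND '2024-02-20'
kept = prune(PARTITIONS, date(2024, 2, 10), date(2024, 2, 20))
print(f"scan {len(kept)} of {len(PARTITIONS)} partitions:", [p.name for p in kept])
# scan 2 of 4 partitions: ['p2', 'p4']
```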

Snowflake’s Comprehensive Security Features

Security is integrated throughout the architecture:

- Authentication & Access: Supports SSO, OAuth, Multi-Factor Authentication (MFA), and granular role-based access controls.

- End-to-End Encryption: Data is encrypted at rest with AES-256 under a hierarchical key model with automatic key rotation. Client traffic is encrypted in transit with TLS.

- Network Security: Offers private connectivity (such as AWS PrivateLink, Azure Private Link, and Google Cloud Private Service Connect) and network policies that allow or block access by IP address range.

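- Audit & Compliance: Records login, query, and access history (for example, in the SNOWFLAKE.ACCOUNT_USAGE views). It also supports regulatory requirements such as SOC 2 Type II, HIPAA, PCI DSS, and FedRAMP, some of which depend on the Snowflake edition or region.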

- Secure Data Sharing: Enables controlled data access between accounts without copying or moving data, reducing risk.

- Continuous Monitoring: Activity can be monitored for unusual behavior. Governance policies such as dynamic data masking and row access policies are enforced at query time.
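
Role-based access control in Snowflake grants privileges to roles and roles to users. A role can also be granted to another role, which then inherits its privileges. The Python sketch below models only that inheritance rule. The custom roles, the `sales.orders` table, and the helper functions are hypothetical. Real features such as object ownership, secondary roles, and future grants are left out.

```python
from collections import deque

# Hypothetical example hierarchy: each role maps to the roles granted to it,
# whose privileges it inherits (e.g. GRANT ROLE analyst TO ROLE data_engineer).
ROLE_GRANTS = {
    "SYSADMIN": {"DATA_ENGINEER"},
    "DATA_ENGINEER": {"ANALYST"},
    "ANALYST": set(),
}

# Privileges granted directly to each role, as (privilege, object) pairs.
PRIVILEGES = {
    "ANALYST": {("SELECT", "sales.orders")},
    "DATA_ENGINEER": {("INSERT", "sales.orders")},
    "SYSADMIN": {("CREATE WAREHOUSE", "account")},
}

def effective_privileges(role, role_grants=ROLE_GRANTS, privileges=PRIVILEGES):
    """A role's own privileges plus those of every role granted to it, transitively."""
    seen, queue, result = {role}, deque([role]), set()
    while queue:
        current = queue.popleft()
        result |= privileges.get(current, set())
        for child in role_grants.get(current, set()):
            if child not in seen:  # guards against revisiting a role
                seen.add(child)
                queue.append(child)
    return result

def is_allowed(role, privilege, obj):
    return (privilege, obj) in effective_privileges(role)

print(is_allowed("DATA_ENGINEER", "SELECT", "sales.orders"))  # True: inherited from ANALYST
print(is_allowed("ANALYST", "INSERT", "sales.orders"))        # False: not granted or inherited
```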

Real-World Use Cases of Snowflake Architecture

- Enterprise Data Warehousing: Centralizes organizational data for reporting and analytics.

- Real-Time Analytics: Supports streaming and event-driven data pipelines for timely insights.

- Business Intelligence & Data Science: Integrates with common BI tools and supports advanced analytics that scale with available compute.

- Data Lakes and Data Mesh: Unifies distributed data sources in structured and semi-structured formats.

- Data Collaboration: Facilitates secure, governed data sharing across departments and partners.

- ETL/ELT Optimization: Efficient transformations using scalable compute resources.

Pros of Snowflake Architecture

- True Elasticity: Compute and storage scale independently. Warehouses can be resized or started quickly, and multi-cluster warehouses scale out automatically to meet demand.

- Massive Concurrency: Separate or multi-cluster warehouses let many users and workloads run at once without competing for the same compute.

- Advanced Performance: Micro-partition pruning, caching, materialized views, and automatic optimization enhance responsiveness.

- Flexible and Cost-Effective: Auto-suspend and pay-per-use pricing reduce waste.

- Simplified Management: No infrastructure to manage, with automated tuning and scaling.

- Robust Security Posture: Enterprise-grade security embedded at every layer.

- Innovative Data Sharing: Enables real-time, zero-copy sharing across accounts.

- Support for Semi-Structured Data: The VARIANT data type stores JSON, Avro, ORC, Parquet, and XML data, which can be queried with SQL alongside structured data.
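
Snowflake queries VARIANT data with path notation such as `src:customer.name` and expands arrays with `LATERAL FLATTEN`. To show what those operations do, the Python sketch below mimics them on a parsed JSON document. `get_path` and `flatten` are hypothetical helpers, and the SQL in the comments is only for orientation. Type casting and most FLATTEN output columns are omitted, and this `flatten` handles arrays only, whereas Snowflake’s FLATTEN also expands objects. As in Snowflake, a missing path yields a null value (`None` here) rather than an error.

```python
import json
import re

# Path tokens: a field name ("customer") or an array index ("[0]").
_TOKEN = re.compile(r"([A-Za-z_][A-Za-z0-9_]*)|\[(\d+)\]")

def get_path(doc, path):
    """Follow a path such as 'customer.name' or 'items[1].sku'; None if absent.

    Roughly what SELECT src:customer.name FROM orders returns for one row.
    """
    current = doc
    for key, index in _TOKEN.findall(path):
        if key:
            if not isinstance(current, dict) or key not in current:
                return None
            current = current[key]
        else:
            i = int(index)
            if not isinstance(current, list) or i >= len(current):
                return None
            current = current[i]
    return current

def flatten(doc, path):
    """One row per array element, similar in spirit to
    SELECT f.index, f.value FROM orders, LATERAL FLATTEN(input => src:items) f
    """
    value = get_path(doc, path)
    if not isinstance(value, list):
        return []
    return [{"index": i, "value": v} for i, v in enumerate(value)]

order = json.loads(
    '{"customer": {"name": "Asha"},'
    ' "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}'
)
print(get_path(order, "customer.name"))   # Asha
print(get_path(order, "items[1].sku"))    # B7
print(get_path(order, "customer.phone"))  # None
for row in flatten(order, "items"):
    print(row["index"], get_path(row["value"], "sku"), get_path(row["value"], "qty"))
# 0 A1 2
# 1 B7 1
```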

Cons and Challenges of Snowflake Architecture

- Cost Complexity: Variable pricing may lead to unforeseen costs without careful monitoring.

- Cloud Vendor Dependence: Snowflake runs entirely on AWS, Azure, or Google Cloud, so an outage at the underlying provider or region can affect availability.

- Lack of On-Premises Option: Not suitable for organizations requiring a fully private, on-site solution.

- Learning Curve: Advanced features and optimization strategies require training and experience.

- Community Size and Ecosystem: Smaller than some established on-premises platforms, potentially limiting resources and third-party integrations early on.
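
One practical answer to cost complexity is a resource monitor. It tracks warehouse credit usage against a quota and triggers actions at percentage thresholds. The Snowflake SQL in the comment shows the general shape of such a monitor, with example names. The Python function is a hypothetical local model of how the thresholds fire, useful for reasoning about where to set them. It is not how Snowflake evaluates monitors internally.

```python
# A monitor of this shape might be created in Snowflake SQL (names are examples):
#   CREATE RESOURCE MONITOR monthly_limit WITH CREDIT_QUOTA = 100
#     FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY
#     TRIGGERS ON 80 PERCENT DO NOTIFY
#              ON 100 PERCENT DO SUSPEND
#              ON 110 PERCENT DO SUSPEND_IMMEDIATE;
#   ALTER WAREHOUSE analytics_wh SET RESOURCE_MONITOR = monthly_limit;

# (threshold percent of quota, action), mirroring the TRIGGERS clause above.
TRIGGERS = [(80, "NOTIFY"), (100, "SUSPEND"), (110, "SUSPEND_IMMEDIATE")]

def fired_actions(used_credits, credit_quota, triggers=TRIGGERS):
    """Return every action whose threshold has been reached, lowest first."""
    if credit_quota <= 0:
        raise ValueError("credit_quota must be positive")
    used_pct = 100 * used_credits / credit_quota
    return [action for pct, action in sorted(triggers) if used_pct >= pct]

for used in (50, 85, 104, 112):
    print(used, fired_actions(used, 100))
# 50 []
# 85 ['NOTIFY']
# 104 ['NOTIFY', 'SUSPEND']
# 112 ['NOTIFY', 'SUSPEND', 'SUSPEND_IMMEDIATE']
```

The third threshold exists because `SUSPEND` lets running queries finish, so usage can keep climbing past 100% for a while. `SUSPEND_IMMEDIATE` cancels running queries.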

Best Practices to Optimize Snowflake Performance and Cost

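- Optimize Data Partitioning and Clustering: Data is micro-partitioned automatically. For very large tables, define clustering keys on frequently filtered columns and check clustering quality with SYSTEM$CLUSTERING_INFORMATION. Automatic reclustering consumes credits, so apply it selectively.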

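- Use Materialized Views and Caching: Precompute common aggregations with materialized views and take advantage of the result cache, which can return results for repeated identical queries without using warehouse compute.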

- Minimize Query Scope: Select only necessary columns and filter data aggressively for faster processing.

- Right-Size Warehouses: Match warehouse size to workload. Larger sizes help with large, complex queries, while multi-cluster warehouses help when many queries run concurrently.

- Automate Warehouse Management: Enable auto-suspend and auto-resume to reduce idle costs.

- Streamline Data Loading: Use efficient file formats (e.g., Parquet). For bulk loads, split data into files of roughly 100 to 250 MB compressed so a warehouse can load them in parallel.
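
The result cache reuses a query’s persisted result when, among other conditions, the new query text is identical and the underlying table data has not changed. Each reuse resets the 24-hour retention period, up to a 31-day cap that the sketch below ignores. The Python class is a hypothetical model of just those rules, with table version numbers standing in for Snowflake’s change tracking. It omits other conditions such as role privileges and non-deterministic functions. In Snowflake itself, reuse can be turned off with the `USE_CACHED_RESULT` session parameter.

```python
import time
from typing import Callable, Dict, Tuple

RETENTION_SECONDS = 24 * 3600  # 24-hour retention, reset on each reuse

class ResultCache:
    """Toy model: reuse a stored result only for identical SQL text over unchanged tables."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        # sql text -> (result, table versions when computed, time of last use)
        self._entries: Dict[str, Tuple[object, Dict[str, int], float]] = {}

    def get_or_run(self, sql: str, table_versions: Dict[str, int],
                   run: Callable[[], object]) -> Tuple[object, bool]:
        """Return (result, cache_hit). `run` executes the query on a cache miss."""
        now = self._clock()
        entry = self._entries.get(sql)  # exact text match in this model
        if entry is not None:
            result, versions, last_used = entry
            if versions == table_versions and now - last_used <= RETENTION_SECONDS:
                self._entries[sql] = (result, versions, now)  # reuse resets retention
                return result, True
        result = run()
        self._entries[sql] = (result, dict(table_versions), now)
        return result, False

cache = ResultCache()
sql = "SELECT region, SUM(amount) FROM sales GROUP BY region"
print(cache.get_or_run(sql, {"sales": 7}, lambda: [("EU", 100)]))  # ([('EU', 100)], False)
print(cache.get_or_run(sql, {"sales": 7}, lambda: [("EU", 100)]))  # ([('EU', 100)], True)
print(cache.get_or_run(sql, {"sales": 8}, lambda: [("EU", 120)]))  # ([('EU', 120)], False)
```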

Conclusion

Snowflake’s architecture marks a major shift in data warehousing, designed for agility, speed, security, and simplicity in the cloud. Its multi-layer design decouples storage, compute, and services, enabling enterprises to scale and innovate faster while maintaining strong security and control. Despite some cost and complexity considerations, Snowflake offers a durable foundation for organizations that want to use data as a strategic asset.

Organizations that implement Snowflake thoughtfully and follow the best practices above can gain faster insights, easier collaboration, and more efficient operations.

Cybersecurity Architect | Cloud-Native Defense | AI/ML Security | DevSecOps

With over 23 years of experience in cybersecurity, I specialize in building resilient, zero-trust digital ecosystems across multi-cloud (AWS, Azure, GCP) and Kubernetes (EKS, AKS, GKE) environments. My journey began in network security—firewalls, IDS/IPS—and expanded into Linux/Windows hardening, IAM, and DevSecOps automation using Terraform, GitLab CI/CD, and policy-as-code tools like OPA and Checkov.

Today, my focus is on securing AI/ML adoption through MLSecOps, protecting models from adversarial attacks with tools like Robust Intelligence and Microsoft Counterfit. I integrate AISecOps for threat detection (Darktrace, Microsoft Security Copilot) and automate incident response with forensics-driven workflows (Elastic SIEM, TheHive).

Whether it’s hardening cloud-native stacks, embedding security into CI/CD pipelines, or safeguarding AI systems, I bridge the gap between security and innovation—ensuring defense scales with speed.

Let’s connect and discuss the future of secure, intelligent infrastructure.