Are you a data analyst, or do you work in data science? Are you looking for use-case-based knowledge of Delta Lake? In this article we discuss Delta Lake from its introduction through to its integration with other applications.

An Introduction To Delta Lake:

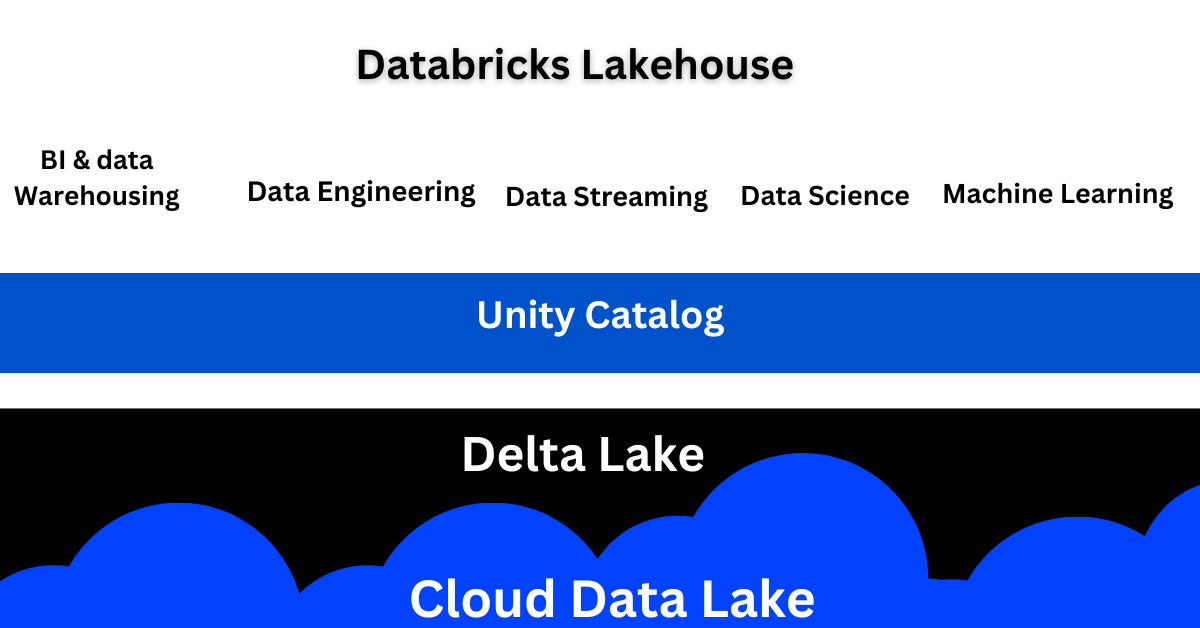

Delta Lake is an open-source storage layer that improves data reliability, scalability, and performance in big data processing systems, particularly Apache Spark. It was developed by Databricks and builds on the Apache Parquet file format: table data is stored as Parquet files, alongside a transaction log that records every change to the table.

Delta Lake brings ACID (Atomicity, Consistency, Isolation, Durability) transactions to data lakes, which typically lack built-in transactional guarantees. With Delta Lake, operations on data are atomic, consistent, isolated, and durable, which protects data integrity and enables reliable processing and analytics.
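
To make the atomicity idea concrete, here is a minimal sketch in plain Python. It is a toy model, not Delta Lake's API: the `commit` function, the directory layout, and the file names are assumptions made for illustration. It relies on one idea, that each commit is a numbered log file and only one writer can create a given number, so a conflicting writer is rejected and has to retry on the next version.

```python
import json
import os
import tempfile


def commit(log_dir, version, actions):
    """Try to publish `actions` as commit number `version` (hypothetical helper).

    Returns True on success, False if another writer already claimed that version.
    """
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        # Mode "x" raises FileExistsError if the file exists,
        # so at most one writer can win a given version number.
        with open(path, "x") as f:
            json.dump(actions, f)
        return True
    except FileExistsError:
        return False


log_dir = tempfile.mkdtemp()
first = commit(log_dir, 0, [{"add": "part-0000.parquet"}])
second = commit(log_dir, 0, [{"add": "part-0001.parquet"}])  # conflicting writer loses
retry = commit(log_dir, 1, [{"add": "part-0001.parquet"}])   # retry on the next version
print(first, second, retry)
```

A production system would also need the storage layer to make the new log file visible only once it is fully written. This sketch ignores that detail.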

Key features of Delta Lake include:

- Schema Evolution: Delta Lake supports schema evolution, allowing you to seamlessly add, modify, or delete columns in your data over time without breaking the existing data pipelines or applications.

- Time Travel: Delta Lake provides the ability to access and query the state of your data at different points in time. You can time travel to earlier versions of data, view changes over time, and perform historical analyses.

- Optimized Data Processing: Delta Lake leverages various optimizations, such as data skipping, predicate pushdown, and schema caching, to accelerate data processing and improve query performance.

- Data Versioning: Delta Lake maintains a transaction log that records all the changes made to the data, enabling full visibility and traceability of data changes. This log can be used for auditing, data lineage, and disaster recovery purposes.

- Streaming and Batch Processing: Delta Lake supports both streaming and batch processing workloads, making it suitable for real-time data ingestion as well as large-scale batch processing.

By incorporating these features, Delta Lake addresses common challenges faced in data lake environments, including data consistency, data quality, and the need for scalable and reliable data processing. It provides a unified solution for managing and processing big data, making it easier to build robust and trustworthy data pipelines.
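
The time travel and data versioning features above both rest on the transaction log. The following sketch shows the idea under simplifying assumptions: the log is an in-memory list, each entry is one commit, and each commit holds `add` and `remove` actions naming data files. The `snapshot` function and the file names are hypothetical. The state of the table at any version is rebuilt by replaying the log up to that version.

```python
def snapshot(log, version):
    """Return the set of data files visible at `version` (inclusive)."""
    files = set()
    for actions in log[: version + 1]:
        for action in actions:
            if "add" in action:
                files.add(action["add"])
            elif "remove" in action:
                files.discard(action["remove"])
    return files


log = [
    [{"add": "a.parquet"}],                            # version 0: initial load
    [{"add": "b.parquet"}],                            # version 1: append
    [{"remove": "a.parquet"}, {"add": "a2.parquet"}],  # version 2: rewrite of a.parquet
]

v1 = snapshot(log, 1)                 # the table as it was at version 1
latest = snapshot(log, len(log) - 1)  # the current table
print(sorted(v1))      # ['a.parquet', 'b.parquet']
print(sorted(latest))  # ['a2.parquet', 'b.parquet']
```

Because old data files are only marked as removed in the log rather than overwritten, an earlier version stays readable for as long as its files are retained.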

History of Delta Lake:

Delta Lake was introduced in 2017 by Databricks, a company founded by the original creators of Apache Spark. It emerged as a response to the challenges organizations faced with data lakes, which lacked transactional capabilities and data reliability.

The development of Delta Lake was driven by the need to provide robustness and reliability to big data storage and processing. Databricks recognized that data lakes needed transactional capabilities similar to traditional databases to ensure data integrity and consistency. Delta Lake aimed to fill this gap and provide a unified solution for managing and processing big data.

The initial version of Delta Lake focused on addressing two fundamental requirements: transactional capabilities and schema evolution. It introduced ACID (Atomicity, Consistency, Isolation, Durability) transactions to data lakes, enabling reliable and consistent data operations. Additionally, Delta Lake supported schema evolution, allowing schema changes without disrupting existing data pipelines.

As Delta Lake gained popularity, its capabilities expanded further. Databricks introduced features like time travel, which allowed users to access and query data at different points in time. This feature provided auditability, debugging, and historical analysis capabilities to users.

Over time, Delta Lake continued to evolve, incorporating performance optimizations such as data skipping, predicate pushdown, and schema caching. These optimizations aimed to improve query performance and accelerate data processing in big data workloads.

Databricks released Delta Lake as an open-source project in April 2019, and in October 2019 the project moved under the Linux Foundation. This move expanded its reach and encouraged community involvement in its development and enhancement.

Since then, Delta Lake has gained significant adoption and integration with various big data platforms and frameworks. It has become a popular choice for organizations dealing with large-scale data processing, analytics, and data engineering tasks.

The evolution of Delta Lake has made it a critical component in modern data architectures, enabling reliable and scalable data storage and processing in big data environments. Its history reflects the continuous effort to address the challenges of data lakes and provide a comprehensive solution for managing and processing big data effectively.

What is the importance of Delta Lake?

Delta Lake matters in big data processing and analytics for the following reasons:

- Data Integrity: Delta Lake ensures data integrity by providing ACID (Atomicity, Consistency, Isolation, Durability) transactions. It guarantees that data operations are reliable and consistent, even in the face of failures or concurrent access. This is crucial in maintaining the accuracy and trustworthiness of data, which is paramount for decision-making and analytics.

- Schema Evolution: Delta Lake supports schema evolution, allowing seamless changes to data structures and formats over time. It enables you to add, modify, or delete columns in your data without disrupting existing pipelines or applications. This flexibility accommodates evolving business needs and simplifies data management.

- Time Travel: Delta Lake enables time travel capabilities, allowing you to access and query data at different points in time. By retaining a transaction log of all changes, Delta Lake facilitates historical analysis, debugging, and auditing. It provides the ability to explore and understand the state of data at various stages, enhancing data governance and compliance.

- Performance Optimization: Delta Lake incorporates performance optimizations to enhance processing speed and efficiency. Techniques such as data skipping and predicate pushdown minimize unnecessary data scanning during query execution, resulting in improved query performance. Schema caching further boosts performance by optimizing schema resolution and data access.

- Data Versioning: Delta Lake’s transaction log maintains a historical record of data changes, enabling full data versioning. This feature is valuable for tracking data lineage, understanding data evolution, and implementing audit trails. It supports compliance requirements and regulatory audits, and it facilitates data governance practices.

- Streaming and Batch Processing: Delta Lake caters to both streaming and batch processing workloads. It seamlessly integrates with popular streaming frameworks, allowing real-time data ingestion, processing, and analytics. The ability to handle continuous data streams alongside batch processing provides a unified solution for diverse data processing needs.
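
The data skipping optimization mentioned in the list above can be sketched in a few lines. This is a toy model, assuming each data file carries the minimum and maximum value of one column. The `FileStats` class, the `files_to_scan` function, and the file names are hypothetical. A range query then needs to read only the files whose value range overlaps the range in the predicate.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    min_value: int  # smallest value of the column in this file
    max_value: int  # largest value of the column in this file


def files_to_scan(files, lower, upper):
    """Keep only files whose [min, max] range overlaps [lower, upper]."""
    return [f.path for f in files if f.max_value >= lower and f.min_value <= upper]


files = [
    FileStats("part-0.parquet", 1, 100),
    FileStats("part-1.parquet", 101, 200),
    FileStats("part-2.parquet", 201, 300),
]

# Query: WHERE value BETWEEN 150 AND 220
selected = files_to_scan(files, 150, 220)
print(selected)  # ['part-1.parquet', 'part-2.parquet']
```

Here `part-0.parquet` is never opened, because its statistics show it cannot contain a matching row.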

Delta Lake in Azure:

Delta Lake is the default storage format for all operations on Azure Databricks. Unless otherwise specified, all tables on Azure Databricks are Delta tables. To load data into the lakehouse, Azure Databricks provides a number of products:

- Delta Live Tables – Delta Live Tables does not use the standard interactive execution found in notebooks. Instead, it emphasizes deploying infrastructure that is ready for production.

- COPY INTO – This allows SQL users to incrementally ingest data from cloud object storage into Delta Lake tables.

- Apache Spark – Apache Spark can be used to load data from external sources into Delta Lake tables.

- Add data UI – The add data UI allows you to easily load data into Azure Databricks from a variety of sources.

- Databricks Partner Connect – You can configure Databricks Partner Connect through the add data UI to load data into Delta Lake tables from partner data sources.

What is Delta Sharing?

Delta Sharing is an open protocol developed by Databricks for sharing data securely with other organizations, independent of the platform they use. Azure Databricks builds Delta Sharing into its Unity Catalog data governance platform.

There are two ways to share data:

- Open sharing – This allows a user to share data with any other user, whether or not they have access to Azure Databricks.

- Databricks-to-Databricks sharing – This allows a user to share data with Azure Databricks users who have access to a Unity Catalog metastore different from the sharing user's own.