If you are a fresher looking for a free Spark tutorial, you are on the right page. In this article I provide a step-by-step tutorial on how to use PySpark and Azure Databricks for data analytics and machine learning. We start with the basics and gradually move on to more advanced concepts of PySpark and the Azure Databricks platform.

What is PySpark?

Spark itself is a fast and general-purpose cluster computing system that provides in-memory processing capabilities, allowing it to handle large data sets with impressive speed. PySpark adds the Python API to Spark, making it accessible and convenient for Python programmers.

PySpark is the open-source Python API for Apache Spark, the distributed data processing framework.

PySpark allows developers and data scientists to efficiently process large-scale data sets in a distributed and parallel manner, enabling them to perform data analysis, data manipulation, and machine learning tasks on big data. Its ability to handle massive data sets efficiently and its compatibility with Python have made PySpark increasingly popular in the big data and data science communities.

PySpark Features:

PySpark offers several powerful features that make it a popular choice for distributed data processing and analysis. Some of its key features include:

- Distributed Data Processing: PySpark allows data to be processed in a distributed and parallel manner across a cluster of machines. This enables efficient handling of large-scale data sets and speeds up data processing tasks.

- In-Memory Computation: Spark, on which PySpark is built, can keep data in memory between operations. This makes iterative processing efficient and significantly reduces disk I/O, leading to faster data processing.

- Fault Tolerance: PySpark provides fault tolerance by keeping track of the lineage of resilient distributed datasets (RDDs), that is, the sequence of transformations that produced them. If a node fails during processing, the lost partitions are recomputed from this lineage information instead of being lost.

- Python API: PySpark offers a Python programming interface, making it accessible to Python developers and data scientists who can leverage their Python skills for big data processing and analysis.

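- Broad Data Source Support: PySpark can read from and write to many sources. These include file systems such as HDFS, file formats such as JSON, CSV and Parquet, Apache Hive tables, and (through connectors) databases such as Apache HBase and Apache Cassandra. This makes it easy to integrate with different data storage systems.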

- High-Level Abstractions: PySpark provides high-level abstractions such as DataFrames and Spark SQL. These make it easier to work with structured data and let Spark's query optimizer improve performance under the hood. (The typed Dataset API is available only in Scala and Java.)

- Integrated Libraries: PySpark ships with MLlib, Spark's own machine learning library. It also works alongside Python libraries such as NumPy, pandas and scikit-learn, for example by converting DataFrames with toPandas() or by applying pandas UDFs. This extends its capabilities for advanced data analytics and modeling.

- Interactive Shell (PySpark Shell): PySpark provides an interactive shell that allows users to experiment and prototype data processing tasks quickly and efficiently.

- Streaming Capabilities: Structured Streaming (and the older DStream-based Spark Streaming API) lets Spark process data streams in near real time. This makes PySpark suitable for applications that need low-latency processing of continuously arriving data.

- Scalability: PySpark’s distributed nature allows it to scale horizontally by adding more nodes to the cluster, ensuring that it can handle increasing data volumes and computation demands.

Simple PySpark Program

Below is a simple PySpark program that demonstrates how to create a Spark DataFrame, perform basic operations on it, and show the results.

```python
# Import required libraries
from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder \
    .appName("Simple PySpark Tutorial") \
    .getOrCreate()

# Sample data in the form of a list of dictionaries
data = [
    {"name": "Asim", "age": 30},
    {"name": "Buddhadeb", "age": 25},
    {"name": "Sujoy", "age": 35},
    {"name": "Debashis", "age": 22},
]

# Create a DataFrame from the data
df = spark.createDataFrame(data)

# Show the DataFrame
print("Data in DataFrame:")
df.show()

# Perform basic operations on the DataFrame
print("Basic DataFrame Operations:")

# Selecting columns
df.select("name").show()

# Filtering data
df.filter(df.age > 25).show()

# Grouping and aggregating
df.groupBy("age").count().show()

# Adding a new column
df.withColumn("age_in_5_years", df.age + 5).show()

# Stop the SparkSession
spark.stop()
```


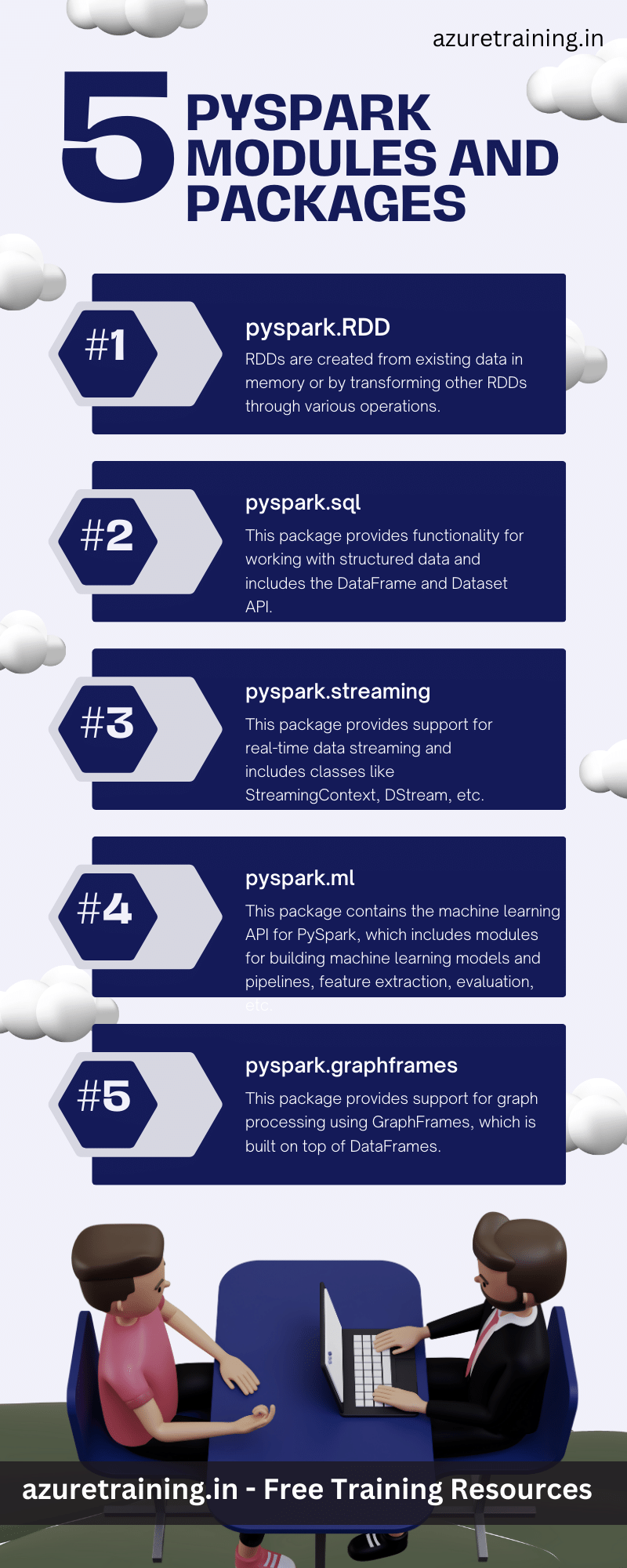

PySpark Modules & Packages

In PySpark, packages and modules organize the library's functionality for distributed data processing and analytics. Let's look at the role each one plays:

- Packages:
  - Packages in PySpark are collections of related modules that are organized together for a specific purpose or functionality.
  - They provide a way to group related components, classes, and functions, making it easier to manage and organize code.
  - Packages often represent higher-level functionalities or libraries within PySpark, providing specialized capabilities for specific tasks.
  - PySpark includes several built-in packages, such as pyspark.sql, pyspark.streaming, pyspark.ml, etc., each serving a specific area of data processing or analysis.

- Modules:
  - Modules in PySpark are individual files that contain Python code, usually with classes, functions, and variables relevant to a specific task.
  - They provide a way to break down the code into smaller, manageable units, making the codebase more maintainable and reusable.
  - Modules can be imported into other Python scripts, notebooks, or modules, allowing the reuse of functions and classes defined in them.
  - PySpark comes with several built-in modules that provide core functionalities, such as pyspark.sql.functions, pyspark.sql.types, pyspark.ml.feature, etc.
  - Users can also create their own modules to encapsulate custom functionality or group related code for specific use cases.

The interplay between packages and modules keeps the PySpark library and its functionality well organized. For example, the pyspark.sql package contains the classes for working with structured data, such as SparkSession, DataFrame and the older SQLContext. Similarly, the pyspark.ml package contains the machine learning API, built around classes such as Pipeline, Estimator and Transformer.

Here are some of the core packages and modules available in PySpark:

- pyspark: The main package, containing the core PySpark API. It includes classes such as SparkContext and SparkConf, which are essential for connecting to a Spark cluster and performing distributed data processing.
- pyspark.sql: Provides functionality for working with structured data through the DataFrame API. It also contains the classes for working with SQL and Hive, such as SparkSession, DataFrameReader and DataFrameWriter.
- pyspark.streaming: Provides the older DStream-based API for real-time data streaming, including classes such as StreamingContext and DStream. The newer Structured Streaming API is available through pyspark.sql.streaming.
- pyspark.ml: Contains the DataFrame-based machine learning API, with modules for building machine learning models and pipelines, feature extraction, evaluation, etc.
- pyspark.mllib: Contains the older, RDD-based machine learning API, which includes algorithms for classification, regression, clustering, etc. It is in maintenance mode and no longer actively developed.
- graphframes: Provides graph processing with GraphFrames, which is built on top of DataFrames. It is a separate package, not part of the PySpark distribution itself.
- pyspark.RDD: The main class representing an RDD in PySpark. RDDs are created from existing data in memory or by transforming other RDDs through various operations.
- pyspark.sql.types: Includes classes for defining data types when working with DataFrames.
- Kafka integration: The old pyspark.streaming.kafka module is no longer available in Spark 3.x. Instead, PySpark reads from and writes to Apache Kafka through the Structured Streaming Kafka source and sink, which require the separate spark-sql-kafka-0-10 connector package.

PySpark RDD – Resilient Distributed Dataset

In PySpark, RDD (Resilient Distributed Dataset) is a fundamental data structure that represents an immutable, distributed collection of objects that can be processed in parallel across a cluster. RDDs provide fault tolerance through lineage information, which allows lost data to be reconstructed in case of node failures.

Key components and functions of the RDD API include:

- pyspark.RDD: This is the main class representing an RDD in PySpark. RDDs are created from existing data in memory or by transforming other RDDs through various operations.
- Transformations: RDDs support various transformations that produce a new RDD from an existing one. Common transformations include map, filter, flatMap, reduceByKey, groupByKey, join, etc.
- Actions: Actions are operations that return non-RDD values to the driver or write data to an external system. Examples of actions are collect, count, reduce, foreach, take, saveAsTextFile, etc.
- Persistence: RDDs can be persisted in memory or on disk to avoid redundant recomputation. The common methods for persistence are cache and persist.
- Partitioning: RDDs are divided into partitions. Methods such as repartition, coalesce and partitionBy control the number of partitions and apply custom partitioning if needed.
- Lineage: RDDs maintain information about the transformations that created them. This lineage information allows Spark to recover lost data and achieve fault tolerance.
- Parallelism: RDDs can be processed in parallel across a cluster of machines, enabling distributed data processing.
- Data Sources: RDDs are usually created from external data through SparkContext methods such as textFile and hadoopFile. These read from the local file system, the Hadoop Distributed File System (HDFS), or any other Hadoop-supported storage system. Structured sources such as Apache Hive are normally accessed through Spark SQL instead.

RDDs were the primary data structure in early versions of Spark. In PySpark they have largely been superseded by DataFrames, which offer more convenient and better-optimized APIs (the typed Dataset API exists only in Scala and Java). However, RDDs remain important for low-level, fine-grained control over distributed data processing and for compatibility with older Spark applications.

FAQs:

- What is PySpark, and why is it popular for big data processing? Answer: PySpark is the open-source Python API for Apache Spark, the distributed data processing framework. It is popular for big data processing because it handles large-scale data sets efficiently in a distributed and parallel manner, offering high-speed processing and fault tolerance.

- Do I need prior experience in Apache Spark to follow the tutorials? Answer: No, these tutorials are designed for beginners with little to no prior experience in Apache Spark or PySpark. We'll start with the basics and gradually introduce more advanced concepts, making it accessible for newcomers to get hands-on experience.

- What kind of data processing tasks will I learn in the tutorials? Answer: The tutorials cover a wide range of data processing tasks, including data cleaning, filtering, aggregations, joining datasets, and working with structured and semi-structured data. You'll also be introduced to Spark's DataFrame API for structured data manipulation.

- Are there any prerequisites or software requirements to follow the tutorials? Answer: You will need Python, PySpark and a Java runtime (which Spark requires) installed on your system. We'll provide instructions on how to set up PySpark in your environment. Familiarity with Python programming basics will be helpful, but we'll provide explanations for new learners.

- Will the tutorials cover machine learning with PySpark? Answer: While the focus of these tutorials is on basic data processing with PySpark, we'll touch on its machine learning capabilities. You'll learn how to use PySpark's MLlib library for simple machine learning tasks, giving you a taste of its potential in the world of data science and analytics.